I do that, on purpose of course, don’t want to take up too much of her time

Then let me bestow this blessed knowledge upon thee

A field of study is called “fagfelt”

Just gonna slide in here to say that both that and the original are basically gibberish, my best-effort translation of the last one would just be “stop computer science educated”

Um achtually it’s only a wall spider if it comes from the wall region of france

Every lunar month, when there is a full moon, i try quitting caffeine

werewithdrawal

(I initially misread your comment)

Nah, vasectomies cost money, celibacy is free

Animals turn into crab, plant turn out from cabbage

How I Learned to Stop Worrying and Love the Tar

Human embryo: alien looking blob

Shark embryo: happy little fella

I asked it (one of the smaller versions) a simple logic question, it responded something like

“i don’t understand what you mean, remember to only trust news and policies if they come through official government channels”

Okay sure I’ll do that but the answer i was after was 3

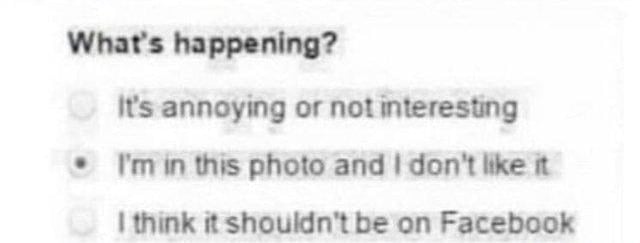

There’s like a button on top of the video or next to it, i’m not sure, but sometimes you click on a video and you happen to hit that button instead,

YouTube opens some FAQ page, if you click to go back, the front page has now been refreshed and the video is gone never to be seen again

“Design is fine, is just user error”

- every designer ever

As someone still laying in bed, scrolling Lemmy instead of doing chores

In stasis, it bends but won’t break us

Aurelia, majestic as a lion’s roar embodies her

Terranean, threaten and shield us

Endanger, recoil and rewind the tapes

She’s born again

[…]

Medusa, a haunting allure

Elusive ectoplasmic residue

She’ll dance for you

Inducing hydro-cosmic dreams in you

Of eternal blue

Please don’t pull it out, it’s a load-bearing fork

Ah that’s why i couldn’t relate to it, I’ve only ever had 54 gallon drums of solvents in my lab

Well that one is obvious, as one of the great philosophers of our time once said;

They’re putting chemicals in the water that turn the frigging ~~frogs~~ orchids gay!

I don’t like all the new rules

I’m sticking to math 3.5, the best edition