I currently have an RX 6700XT and I’m quite happy with it when it comes to gaming and regular desktop usage, but I was recently doing some local ML stuff and was just made aware of the huge gap NVIDIA has over AMD in that space.

But yeah, going back to NVIDIA (I used to run a 1080) after going AMD… seems kinda dirty to me ;-; I was very happy to move to AMD and finally be free from the walled garden.

At first I thought I’d just buy a second GPU, keep using my 6700XT for gaming and use the NVIDIA card for ML, but unfortunately my motherboard doesn’t have 2 PCIe slots I could use for GPUs, so I need to choose. I would be able to buy a used RTX 3090 for a fair price; I don’t want to go for current gen because of the current pricing.

So my question is: how is NVIDIA nowadays? I specifically mean Wayland compatibility, since I just recently switched and it would suck to go back to Xorg. Other than that, are there any hurdles, issues, annoyances, or is it smooth and seamless nowadays? Would you upgrade in my case?

EDIT: Forgot to mention, I’m currently using GNOME on Arch(btw), since that might be relevant

It depends. GNOME on Wayland + Nvidia runs great. But if you go for the tiling window manager camp, you will run into several issues in sway and hyprland. Things like having to use a software cursor because [insert nvidia excuse], and high cpu usage by just moving the mouse.

Well… I don’t know, I would recommend GNOME on Wayland or maybe KDE, haven’t tried the latest Plasma 6 release, but outside that, avoid it.

> high cpu usage by just moving the mouse.

This sounds like co-operative multi-tasking on a single CPU. I remember this with Windows 3.1x around 30 years ago, where the faster you moved your mouse, the more impact it would have on anything else you were running. That text scrolling too fast? Wiggle the mouse to slow it down (etc, etc).

I thought we’d permanently moved on with pre-emptive multi-tasking, multi-threading and multiple cores… 🤦🏼‍♂️

I don’t have an answer to your nvidia question, but before you go and spend $2000 on an nvidia card, you should give the rocm docker containers a shot with your existing card. https://hub.docker.com/u/rocm https://github.com/ROCm/ROCm-docker

It’s made my use of rocm 1000x easier than actually installing it on my system, and it was sufficient for my uses of running inference on my 6700xt.
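For anyone who wants to try that, here is a rough sketch of how such a container is typically started, written as a small Python helper that only builds the `docker run` command. The `/dev/kfd` and `/dev/dri` device flags are the usual way to expose an AMD GPU to a ROCm container. The `rocm/pytorch` image name and the `HSA_OVERRIDE_GFX_VERSION=10.3.0` override (a commonly reported workaround for cards like the 6700 XT that are not on the official support list) are assumptions here, not something confirmed in this thread, so adjust them for your setup.

```python
import shlex


def build_rocm_docker_cmd(image="rocm/pytorch", gfx_override="10.3.0"):
    """Return the argv list for an interactive ROCm container.

    `image` and `gfx_override` are illustrative defaults (assumptions),
    not values taken from the thread.
    """
    cmd = [
        "docker", "run", "-it", "--rm",
        "--device=/dev/kfd",                     # ROCm compute interface
        "--device=/dev/dri",                     # GPU render nodes
        "--group-add", "video",                  # group that owns the GPU devices
        "--security-opt", "seccomp=unconfined",
    ]
    if gfx_override:
        # Assumed workaround: present the card as a supported RDNA2 target.
        cmd += ["-e", f"HSA_OVERRIDE_GFX_VERSION={gfx_override}"]
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    # Print the command so it can be inspected before running it by hand.
    print(shlex.join(build_rocm_docker_cmd()))
```

Printing the command instead of running it keeps the sketch harmless; paste the output into a terminal once it looks right for your machine.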

Better, but still shit. The main holdup right now, from what I see, is wayland-protocols and the WMs adding Explicit Sync support, as the proprietary driver does not have implicit sync support. It’s part of a larger move for the graphics stack to move to explicit sync:

https://gitlab.freedesktop.org/wayland/wayland-protocols/-/merge_requests/90

Once this is in, the flickering issues will be solved and NVIDIA on Wayland will be usable as a daily driver in most situations.

Yeah, was just reading about it and it kind of sucks, since one of the main reasons I wanted to go Wayland was multi-monitor VRR and I can see it is also an issue without explicit sync :/

Yeah. I have a multi-monitor VRR setup as well and happen to have a 3090, and not being able to take advantage of Wayland really sucks. And it’s not like Xorg is any good in that department either, so you’re just stuck between a rock and a hard place until explicit sync is in.

Let’s see what happens first: me getting a 7900xtx or this protocol being merged.

Now I’m actually considering that one as well. Or I’ll wait a generation I guess, since maybe by then Radeon will at least be comparable to NVIDIA in terms of compute/ML.

Damn you NVIDIA

Yes, I am pretty much in the same boat: running Linux as a daily driver, currently on an ancient AMD GPU, and thinking of buying NVIDIA for exactly ML. I really don’t want to give them my money, as I dislike the company and their management, but AMD is subpar in that department, so there’s not much of a choice.

I have an Nvidia 3080TI and an AMD RX 8900 XTX.

The AMD runs great on any distro, I love it. The Nvidia is such a huge pain that I installed Windows on that PC.

Are you from the future? Please tell us more about the RDNA4 architecture if so :)

Plasma 6(arch) is pretty excellent. There is the bug mentioned in other comments with Xwayland that won’t be (fully) fixed until the Explicit sync wayland protocol is finalized and implemented, but that should apply to any wayland compositor.

As to wayland vs x11: if you want to game or do anything else that is X11-only, use X11; otherwise most everything else can use wayland.

Same here, but it turned out a lot of frameworks like tensorflow or pytorch do support AMD’s ROCm. I managed to run most models just by installing the rocm version of these dependencies instead of the default one.
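If you are not sure which build you ended up with, a quick check like the one below can tell you. It is only a sketch: it relies on `torch.version.hip` being set on ROCm builds of PyTorch (and `None` otherwise) and on ROCm GPUs being exposed through the regular `torch.cuda` API. The function name is made up for this example.

```python
def describe_torch_backend():
    """Return a short description of the installed PyTorch build."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # ROCm wheels report a HIP version; CUDA and CPU-only wheels do not.
    hip = getattr(torch.version, "hip", None)
    if hip is None:
        return "PyTorch build without ROCm/HIP (CUDA or CPU-only wheel)"

    # ROCm devices are reached through the torch.cuda namespace.
    if not torch.cuda.is_available():
        return f"ROCm build (HIP {hip}), but no usable GPU was detected"
    return f"ROCm build (HIP {hip}) using {torch.cuda.get_device_name(0)}"


if __name__ == "__main__":
    print(describe_torch_backend())
```

If this reports a build without ROCm/HIP, the default wheel was probably installed instead of the rocm one.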

Yeah, I’m currently using that one, and I would happily stick with it, but it just seems AMD hardware isn’t up to par with Nvidia when it comes to ML.

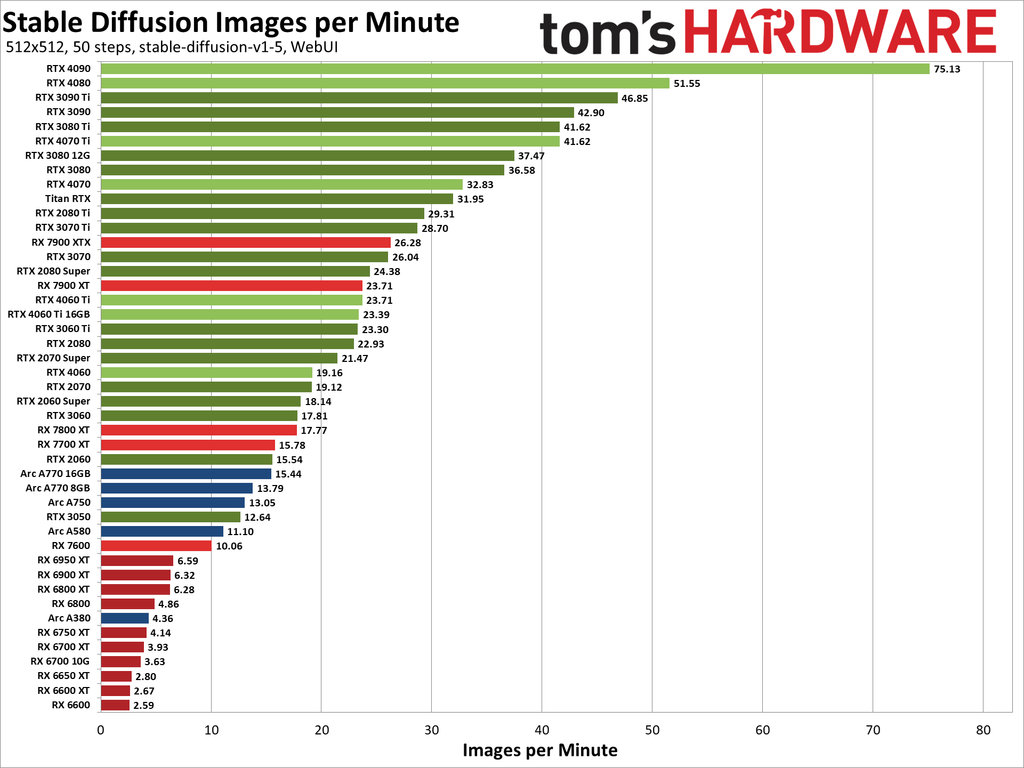

Just take a look at the benchmarks for stable diffusion:

Aren’t those things written specifically for nVidia hardware? (I used to be a developer, but this is not at all my area of expertise)

Fan control is either impossible or a pain in the ass, since stuff like Green With Envy uses something only available on x11. For me, it means my GPU fans spin up and down repeatedly at idle because the minimum fan speed is something like 33% and I can’t pin it there without a program to do so, let alone set reasonable fan curves.

nvidia-settings and https://github.com/Lurkki14/tuxclocker support fan control on Wayland
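On the fan curve part: whichever tool ends up applying the speed, the curve itself is just interpolation between a few temperature/speed points with a floor at the minimum speed. A minimal sketch is below. The curve points are made-up example values (only the 33% floor comes from the comment above), and actually reading the temperature and setting the fan is left to nvidia-settings or tuxclocker.

```python
def fan_speed_for(temp_c, curve=((40, 33), (60, 50), (75, 75), (85, 100))):
    """Map a GPU temperature in Celsius to a fan speed in percent.

    `curve` is a sequence of (temperature, speed) points; the defaults
    are illustrative values, not recommendations. Speeds are clamped to
    the first and last point and interpolated linearly in between.
    """
    points = sorted(curve)
    if temp_c <= points[0][0]:
        return points[0][1]      # pin to the minimum speed at idle
    if temp_c >= points[-1][0]:
        return points[-1][1]     # full speed above the last point
    for (t0, s0), (t1, s1) in zip(points, points[1:]):
        if t0 <= temp_c <= t1:
            return round(s0 + (s1 - s0) * (temp_c - t0) / (t1 - t0))


if __name__ == "__main__":
    for temp in (30, 45, 60, 70, 90):
        print(temp, fan_speed_for(temp))
```

Pinning everything below the first point to the minimum speed is what avoids the spin up and down at idle described above.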