Suppositories tell all. But remember, more than two and you’re just playing with yourself

Tangent: how would this telescope do turned around and pointed at our deepest oceans? There’s far more alien life to actually find and study down there, and it’s still unstudied, for good reasons I suppose I’m fishing for.

I have a couple of different units. Both are recommendable and in the sub-$100 range. The magnetic flaps are handy for slapping them onto sheet metal like case sides. One is a trifold, the other a bifold. They stack and sit side by side nicely in many arrangements. USB-C power pass-through lets you daisy-chain a single high-current input; they’re pretty slick. I’ve had both of the screens and a GL.iNet travel router daisy-chained in series on a single power feed, no issues. Oh man, touch screen though? I have no experience, unfortunately.

This is literally fun to these people. Foaming at the mouth gives them energy, it’s all they can run on anymore. Vapid angst.

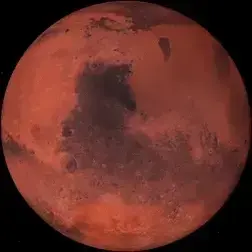

This is high quality. AI? Can’t hate it either way

Like building a fucking train station to return to? Lol

People who aren’t dead inside, for aforementioned reasons

What a treat! I just got done setting up a second venv within the sd folder: one called amd-venv, the other nvidia-venv. I copied the webui.sh and webui-user.sh scripts and made separate flavors of those as well, each pointing to its respective venv. Now if I just had my nvidia drivers working, I could probably set my power supply on fire running them in parallel.
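
For anyone wanting to try the same split, here is a rough sketch of the per-GPU config part. `make_flavor` is a made-up helper name, and the `webui-user-<flavor>.sh` file naming is just my assumption. It also assumes the launcher picks up a `venv_dir` variable from the user script, which is how the stock webui-user.sh looks to me, so check your own copy first.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: write a per-GPU copy of the user config that points
# at its own venv (amd-venv or nvidia-venv) inside the sd folder.
# Assumption: the launcher script reads `venv_dir` from this file.
make_flavor() {
  local sd_dir="$1"   # path to the sd folder
  local flavor="$2"   # "amd" or "nvidia"
  local cfg="$sd_dir/webui-user-$flavor.sh"

  {
    printf '#!/usr/bin/env bash\n'
    printf '# Generated: config for the %s flavor\n' "$flavor"
    printf 'venv_dir="%s-venv"\n' "$flavor"
  } > "$cfg"

  # Print the path so the caller can see what was written.
  echo "$cfg"
}

# Example (not run here):
#   make_flavor "$HOME/sd" amd
#   make_flavor "$HOME/sd" nvidia
```

Each copied webui.sh then still needs to be edited to source its matching config file; that part depends on your version of the script, so I left it out.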

I might take the docker route for the ease of troubleshooting if nothing else. So very sick of hard system freezes/crashes while kludging through the troubleshooting process. Any words of wisdom?

Since only one of us is feeling helpful, here is a 6-minute video for the rest of us to enjoy: https://www.youtube.com/watch?v=lRBsmnBE9ZA

I started reading into the ONNX business here: https://rocm.blogs.amd.com/artificial-intelligence/stable-diffusion-onnx-runtime/README.html It didn’t take long to see that it was beyond me. Has anyone distilled an easy-to-use model converter or conversion process? One I saw required an HF token for the process, yeesh.

Well, I finally got the nvidia card working to some extent. On the recommended driver it only works in lowvram; medvram maxes out VRAM too easily on this driver/CUDA version for whatever reason. Does anyone know the current best nvidia driver for sd on Linux? Perhaps 470, the other one offered by the LM driver manager…?

We are not alone then. Thanks for your input!

Do you need to be hazardously close to a tower for good stability? Fascinating for the future of wireless power!

Fourier than what?