- 1 Post

- 5 Comments

1·2 months ago

which does support the idea that there is a limit to how good they can get.

I absolutely agree. I’m not necessarily one to say LLMs will become incredible general-intelligence-level AIs. I’m really just disagreeing with people’s negative sentiment: the claim that they are becoming worse, or are scams, is not true at the moment.

It doesn’t prove it either: as I said, 2 data points aren’t enough to derive a curve

Yeah, the only reason I didn’t include more is that it’s a pain in the ass pulling together multiple research papers / results over the span of GPT-2, 3, 3.5, 4, o1, etc.

24·2 months ago

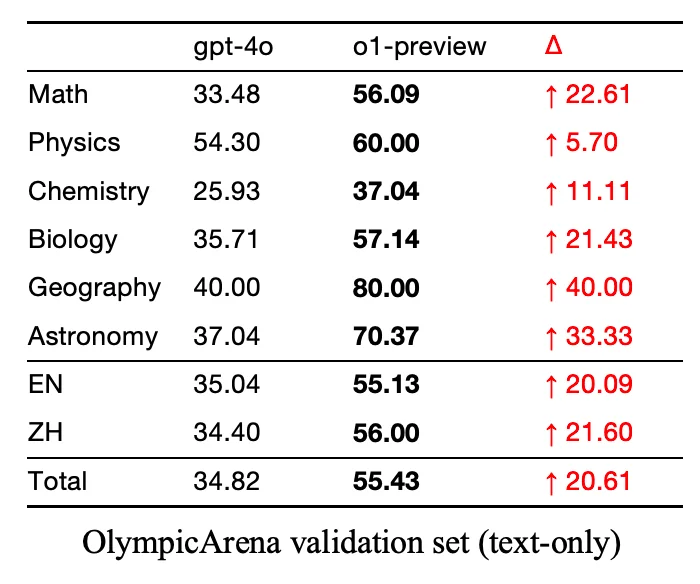

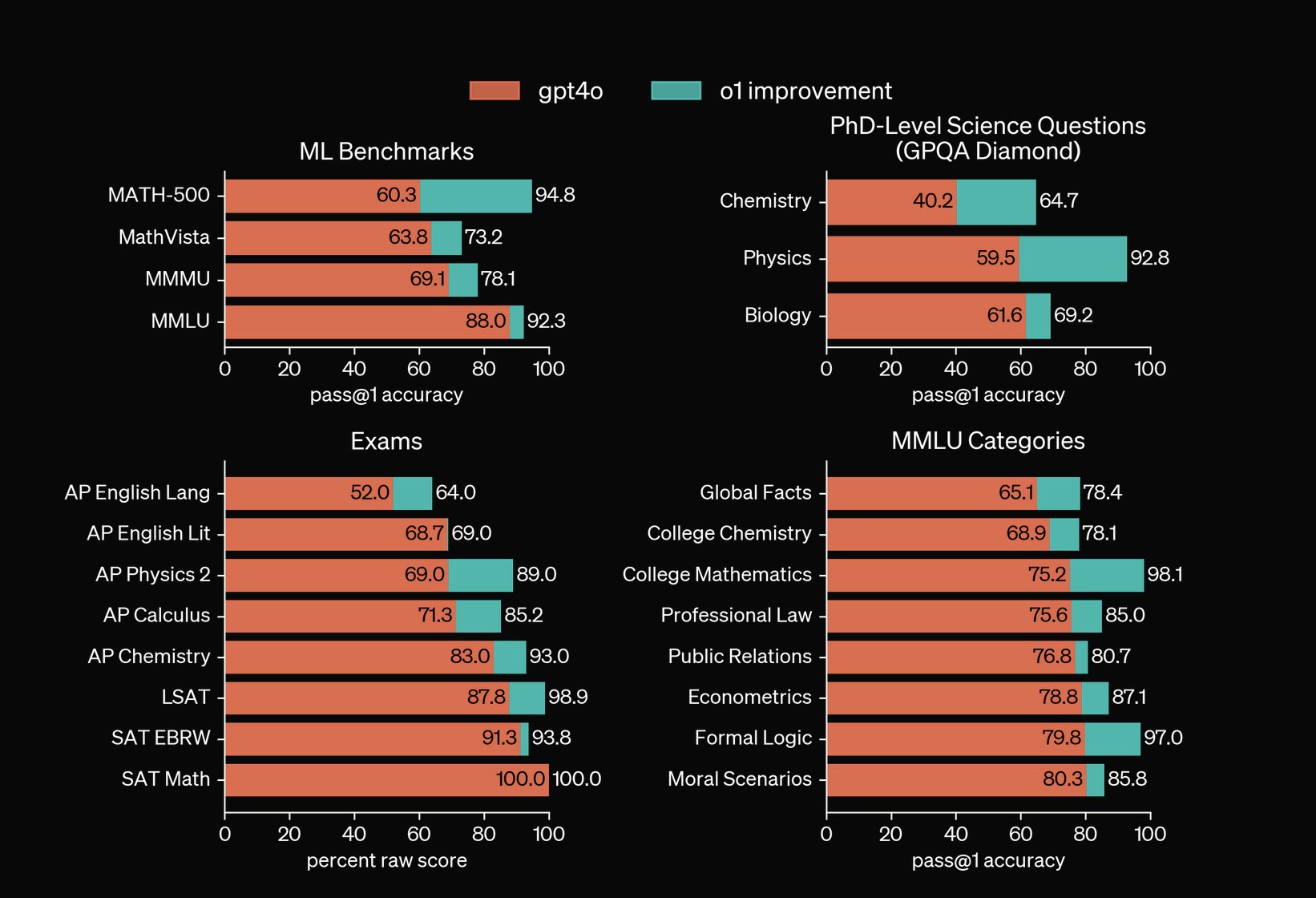

The jump from GPT-4o -> o1 (the preview, not the full release) was a 20% cumulative knowledge jump. If that’s not an improvement in accuracy, I’m not sure what is.

13·2 months ago


Compare the increase from their V2 GPT-4o model to their reasoning o1-preview model. The jumps from last year’s GPT-3.5 -> GPT-4 were also quite large. Secondly, if you want to take OpenAI’s own research into account, that’s in the second image.

36·2 months ago

Curious why your perspective is that they’re more of a scam when, by all metrics, they’ve only improved in accuracy?

Not sure entirely where this fits into this conversation, but there’s one thing I’ve found really interesting that’s discussed in this Convo w Dr. K (I don’t have a timestamp, sorry): Tai Chi has a much more significant effect on all health perspectives than typical Western running/jogging/yoga etc.

And research papers can note this, but as soon as researchers start attempting to dig into the actual mechanical process behind why it has such a significant effect, their papers will be rejected because they dip too far into Woo/Spiritual territory, despite not describing what the woo is, just acknowledging that “something” is happening there.

I think it’s interesting that we can measure results and attempt to explain what we’re seeing, but Western research tends to be so tied to physical mechanisms that it has almost started hindering our advancement.